The online video streaming experience is still below par. Long load times, endless buffering, stalled videos, and pixelation often lead users to abandon videos. But Deep Learning and AI can dramatically improve this experience by paying attention to both the content being streamed and the computational power of the device streaming it.

It’s 6.30 am. You are groggy but determined to watch the newest episode of Game of Thrones. However, just as the Great Battle reaches fever pitch, the video pauses and begins to buffer. What was supposed to be an exhilarating experience is now laborious.

A few agonizing seconds later, the video crawls ahead, but at a lower resolution that barely shows who is killing whom in the show’s already dark atmosphere. You’re angry and frustrated. Why is online streaming so frustrating?

Honestly, it shouldn’t be this frustrating. Thanks to Deep Learning and AI, zero-delay, enhanced HD OTT streaming is no longer a distant dream.

Adaptive Bitrate and Why It Is Not Enough

Over-the-top (OTT) content creators and providers, such as Hotstar and TVF, are producing stunning content every week. But they have largely been unable to control the users’ Quality of Experience (QoE). QoE is typically gauged by the video startup time, the overall video resolution, and the number of times the video pauses or stalls. Smooth video delivery depends heavily on bandwidth (read: internet speed), so even a slight network variation can drastically affect the user experience.

Most content providers tackle this challenge with a technique called adaptive bitrate (ABR) streaming.

Here’s how it works: Say you’re watching a YouTube video on your smartphone over a mobile network on your commute to work. The video is streaming at a smooth 1080p, until suddenly the internet speed drops. Without ABR, the video would have stalled to buffer. But today, ABR is designed to handle unpredictable bandwidth variations. The video now switches to a lower resolution, say 480p, but keeps playing unless the connection is lost for too long. This works because the video is encoded at several bitrates and split into short segments, and the player “adapts” by choosing, segment by segment, the version that the current bandwidth can sustain. Put simply, ABR reduces buffering by compromising on video quality.
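The adaptation step can be sketched in a few lines. This is a minimal, throughput-based rule written for illustration, not how YouTube or any particular player actually decides: the bitrate ladder, the 0.8 safety factor, and the 5-second “panic” threshold are all assumed values.

```python
from statistics import harmonic_mean
from typing import Sequence

# Hypothetical bitrate ladder in kbps; real services choose their own renditions.
LADDER_KBPS = {
    "240p": 400,
    "480p": 1_200,
    "720p": 2_800,
    "1080p": 5_000,
}


def estimate_throughput(samples_kbps: Sequence[float]) -> float:
    """Estimate bandwidth from recent per-segment download speeds.

    The harmonic mean is pulled down by slow samples, so one fast
    download does not make the estimate overly optimistic.
    """
    return harmonic_mean(samples_kbps)


def choose_rendition(
    samples_kbps: Sequence[float],
    buffer_s: float,
    safety: float = 0.8,          # use only 80% of the estimate (assumed value)
    panic_buffer_s: float = 5.0,  # assumed "about to stall" threshold
) -> str:
    """Pick the highest rendition whose bitrate fits the estimated bandwidth."""
    ladder = sorted(LADDER_KBPS.items(), key=lambda item: item[1])
    lowest = ladder[0][0]
    if buffer_s < panic_buffer_s:
        return lowest  # little video buffered: play safe to avoid a stall
    budget = safety * estimate_throughput(samples_kbps)
    best = lowest
    for name, kbps in ladder:
        if kbps <= budget:
            best = name
    return best


print(choose_rendition([8000, 7500, 9000], buffer_s=20))  # 1080p
print(choose_rendition([8000, 1500, 1200], buffer_s=12))  # 480p
```

Production players typically combine throughput estimates with buffer-based rules in more elaborate ways. Notice, though, that every decision here is driven by network measurements alone; nothing in the rule knows what the video actually shows.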

ABR is undoubtedly an improvement, but it is still at the mercy of internet speed: when bandwidth drops, so does quality.

It’s 2019, and artificial intelligence and machine learning dominate many technology conversations. Thankfully, they also offer newer solutions for improving online video streaming.

From Adaptive to Intelligent

Over the years, video transmission has been treated as an extension of data communication, such as text or email. As a result, improvements in video streaming have been viewed through the lens of data and network optimization.

Today’s video streaming pipeline is blind to the content being streamed. The streaming algorithm may send a higher bitrate during a scene where characters are casually conversing, but “adapt” to a network limitation exactly where high quality is paramount, such as an action scene.

Imagine being able to see every wrinkle of Tyrion’s face as he raises a toast, but not being able to see anything beyond a highly pixelated dragon flying through the clouds as Jon Snow holds on for dear life.

This raises the question: what if we could be aware of the scene’s content? What if the encoding and streaming algorithms knew the relative importance of quality, scene by scene?

What if we could also be aware of the computational power of the user’s smartphone and the context or the setting in which a particular video is being consumed?

The best answer we have to these what-ifs is Deep Learning.

Myelin Foundry, a deep tech foundry based in Bangalore, is developing deep neural network (DNN)-based quality enhancement technologies that can be applied to video content to improve the user’s QoE.

Ganesh Suryanarayanan, Myelin Foundry’s Co-founder and CTO, says that content-aware AI can significantly improve the user experience and make viewing novel, immersive, and personalized. “Additionally,” he elaborates, “device-aware deep neural nets can dynamically leverage mobile compute and graphic resources to enhance and scale user experiences.”

A video is encoded into multiple streams, the top one representing the highest quality. But today’s encoders work at the level of pixels, within a frame and across nearby frames, without understanding what is happening in the scene. They could instead look at the entire video and learn what is happening. By doing so, we can exploit the differing redundancies in the content to transmit the video intelligently and significantly improve QoE.
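To make scene-by-scene importance concrete, here is a minimal sketch of content-aware bit allocation. It assumes each scene already carries an importance score (hypothetical here; in practice such a score might come from a neural network that recognizes action or fine detail) and simply shares a fixed bit budget in proportion to those scores. It is an illustrative stand-in, not Myelin Foundry’s method.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Scene:
    name: str
    duration_s: float
    importance: float  # hypothetical score, e.g. from a content classifier


def allocate_bitrates(
    scenes: List[Scene], avg_kbps: float, floor_kbps: float = 300.0
) -> Dict[str, float]:
    """Share a fixed bit budget across scenes in proportion to importance.

    Every scene gets at least floor_kbps; the spare bits are split by
    importance * duration, so the average bitrate over the whole video
    still equals avg_kbps.
    """
    total_s = sum(s.duration_s for s in scenes)
    total_kbits = avg_kbps * total_s
    floor_kbits = floor_kbps * total_s
    if floor_kbits > total_kbits:
        raise ValueError("floor bitrate exceeds the average budget")
    weight = sum(s.importance * s.duration_s for s in scenes)
    if weight <= 0:
        raise ValueError("at least one scene needs a positive importance")
    spare = total_kbits - floor_kbits
    rates = {}
    for s in scenes:
        share_kbits = spare * s.importance * s.duration_s / weight
        rates[s.name] = floor_kbps + share_kbits / s.duration_s
    return rates


scenes = [Scene("toast", 60, 1.0), Scene("dragon", 60, 3.0)]
print(allocate_bitrates(scenes, avg_kbps=2000))  # {'toast': 1150.0, 'dragon': 2850.0}
```

With the same average of 2,000 kbps, bits move from Tyrion’s quiet toast to the dragon sequence while the total data cost stays unchanged. Real content-aware encoders are far more involved; the sketch only shows the principle.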

Being Device Aware

The capability of the device plays an important role in decoding. People prefer watching videos on the go, and for good reason: many smartphones now rival laptops in features and processing power. If we know which device a person is watching on, we can use that device’s particular capabilities to provide a better experience.
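A device-aware player might, for example, decide how much on-device enhancement to run. The sketch below is purely hypothetical: the `DeviceProfile` fields, the tier names (`full_sr`, `light_sr`), and the compute thresholds are invented for illustration, and a real player would obtain such information from platform APIs.

```python
from dataclasses import dataclass


@dataclass
class DeviceProfile:
    """Hypothetical snapshot of a device's capabilities and state."""
    gpu_gflops: float      # rough available graphics compute (assumed metric)
    has_npu: bool          # dedicated neural accelerator present?
    battery_pct: float     # remaining battery, 0-100
    screen_height_px: int  # vertical resolution of the display


def pick_enhancement(device: DeviceProfile, stream_height_px: int) -> str:
    """Choose a hypothetical on-device enhancement tier for the current stream."""
    if stream_height_px >= device.screen_height_px:
        return "none"      # stream already matches the screen; nothing to upscale
    if device.battery_pct < 20:
        return "none"      # save power on a low battery
    if device.has_npu or device.gpu_gflops >= 500:
        return "full_sr"   # larger super-resolution network
    if device.gpu_gflops >= 100:
        return "light_sr"  # smaller, faster network
    return "none"          # too little compute to enhance in real time


flagship = DeviceProfile(gpu_gflops=1000, has_npu=True, battery_pct=80, screen_height_px=1080)
budget = DeviceProfile(gpu_gflops=150, has_npu=False, battery_pct=80, screen_height_px=1080)
print(pick_enhancement(flagship, stream_height_px=480))  # full_sr
print(pick_enhancement(budget, stream_height_px=480))    # light_sr
```

The same 480p stream is treated differently on each phone: the flagship can afford a heavier enhancement network, while the budget device falls back to a lighter one.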

Being Context Aware

It doesn’t end here. Deep learning could even pay attention to context. So, if we know that a user streams Game of Thrones at 6.30 am every week, or watches her favorite show while travelling, we can use that context to improve and personalize her QoE.

The Business of Streaming

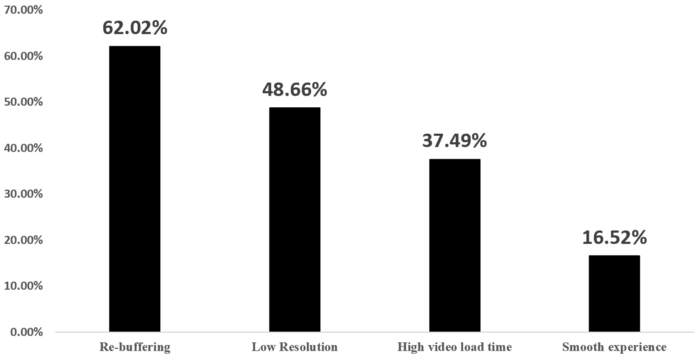

If you’re wondering why OTT platforms would invest in this technology, consider this: “We conducted a survey of 1,000 people to understand the OTT streaming experience of urban viewers in India,” says Aditi Olemann, Co-founder and Head of Marketing at Myelin Foundry. “It was surprising that 62% of people face buffering issues and get frustrated while watching OTT media while travelling.”

Now, more than ever, audiences have little or no tolerance for pixelation, buffering, stalled videos, and long load times. They may simply walk away, so improving QoE has a direct impact on revenue.

At the same time, online streaming is no longer restricted to short videos and fiction. It now extends to areas with much greater revenue, such as gaming and online sports viewing.

Gamers have traditionally used dedicated consoles and PCs, but games like PUBG are pulling them towards their smartphones and online play. And with Google’s new game streaming platform, Stadia, announcing that it needs a 25 Mbps connection for flawless streaming, online game streaming could remain a pipe dream for most users in India. Thankfully, AI can help provide better QoE at low bandwidth and, therefore, at lower cost.

“We also found that 53.98% of respondents were willing to pay a premium to watch specific content with limited load or buffer time,” Olemann elaborates. “And so, we are building a technology to allow users to download 1080p or even 2K content for the data cost of a low-resolution (240–480p) download. This will help significantly reduce the data needed, time taken, and storage required to save and watch your favourite videos.”
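A rough worked example shows why the data savings can be large, since file size is approximately bitrate multiplied by duration. It assumes illustrative bitrates of 5,000 kbps for 1080p and 400 kbps for 240p and a 60-minute episode; these are ballpark figures chosen for the arithmetic, not numbers from Myelin Foundry or its survey.

```latex
\begin{aligned}
\text{size} &\approx \frac{\text{bitrate} \times \text{duration}}{8} \\[4pt]
\text{size}_{1080p} &\approx \frac{5000\ \text{kbit/s} \times 3600\ \text{s}}{8} = 2{,}250{,}000\ \text{kB} \approx 2.25\ \text{GB} \\
\text{size}_{240p} &\approx \frac{400\ \text{kbit/s} \times 3600\ \text{s}}{8} = 180{,}000\ \text{kB} \approx 180\ \text{MB} \\
\frac{\text{size}_{1080p}}{\text{size}_{240p}} &= 12.5
\end{aligned}
```

Under these assumptions, the low-resolution download is about one-twelfth the size; how close an on-device enhancement network can bring it to true 1080p depends entirely on the model.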

The bigger picture, in which superior video quality brings higher revenue for OTT platforms and lower costs for consumers, is now coming into view. And its benefits, such as personalisation or using AI to generate content, will extend even beyond solving today’s problems of sole reliance on network conditions and heavy buffering.

AI and deep neural network-based enhancements are the biggest innovation in online streaming. And very soon, they will dramatically improve user QoE and alter video consumption forever.